Snapchat Accessible AR

Hackathon, Lens Studio, JavaScript, TypeScript, Hand Tracking, 3D Graphics, Computer Vision, Sign Language, Accessibility, LA Hacks, Augmented Reality, Snapchat Spectacles, ASL

Sunday, April 27, 2025

Role: AR/ML Engineer & Team Lead | Hackathon: LA Hacks 2025 (Finalist)

TL;DR

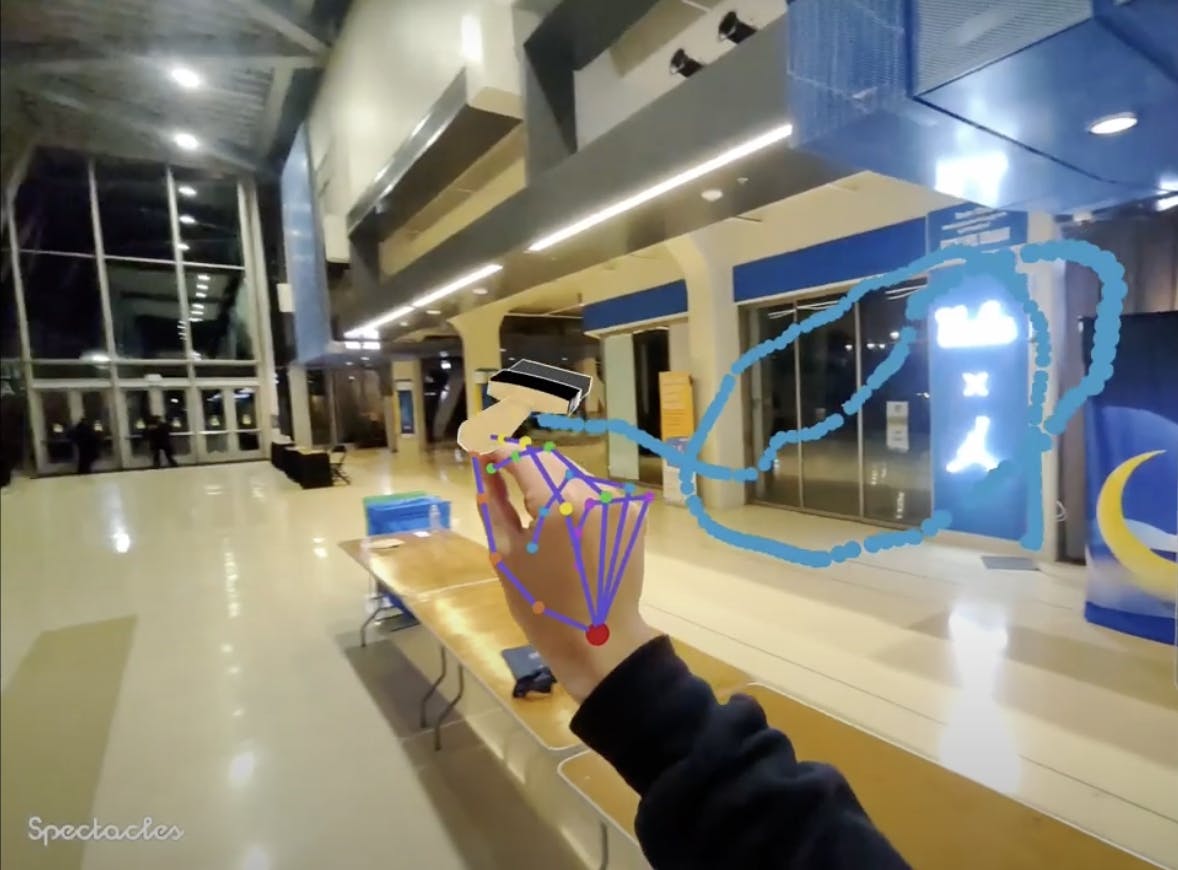

Accessible AR turns American Sign Language into a controller: sign a word, and a physics-enabled 3D object appears on the real-world surface in front of you.

Built with Lens Studio, Snap Spectacles Gen 4, and a custom prefab/physics stack, the project shows how AR + AI can empower Deaf/HoH creators.

Inspiration

Augmented reality is poised to become AI’s everyday interface, but most tools assume voice or touch. We asked: “What if you could build worlds just by signing?” Accessible AR answers by mapping ASL gestures to dynamic object creation, making spatial computing more inclusive.

Key Features

- ASL Gesture Recognition: ObjectTracking3D measures hand-joint distances to classify signs at roughly 90% accuracy

- Prefab-Based World Building: Reusable templates spawn physics bodies, colliders, and interactions on demand

- World-Mesh Physics: Rigid bodies land, bounce, and slide on Spectacles’ live surface mesh

- 3D Painting Mode: Pinch to “spray” colored voxels in mid-air

- Gesture-Controlled RC Car: Spell “C-A-R” to spawn and steer a vehicle with hand poses

- Movable Tutorials: Pinch-and-drag UI panels keep instructions always visible
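To make the distance-based recognition idea concrete, here is a minimal sketch of how a hand pose could be matched against stored sign templates. It is plain TypeScript and does not call any Lens Studio API: the `HandPose` shape, the joint names, the chosen features, and the `maxError` threshold are all assumptions for illustration, and nearest-template matching is a stand-in technique, not necessarily the classifier used in the project.

```typescript
// Hypothetical types: in a real lens the joint positions would come from
// hand tracking; here they are plain objects so the sketch runs anywhere.
type Vec3 = { x: number; y: number; z: number };

interface HandPose {
  wrist: Vec3;
  middleKnuckle: Vec3;
  thumbTip: Vec3;
  indexTip: Vec3;
  middleTip: Vec3;
  ringTip: Vec3;
  pinkyTip: Vec3;
}

const FINGERTIPS = ["thumbTip", "indexTip", "middleTip", "ringTip", "pinkyTip"] as const;

function distance(a: Vec3, b: Vec3): number {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Feature vector: wrist-to-fingertip distances plus the thumb-to-index gap,
// divided by a hand-size reference so the result does not depend on scale.
function features(pose: HandPose): number[] {
  const scale = distance(pose.wrist, pose.middleKnuckle) || 1;
  const f = FINGERTIPS.map((tip) => distance(pose.wrist, pose[tip]) / scale);
  f.push(distance(pose.thumbTip, pose.indexTip) / scale);
  return f;
}

interface SignTemplate {
  label: string;
  features: number[];
}

// Returns the label of the closest template, or null when even the best
// match has a root-mean-square error above maxError (an assumed threshold).
function classify(pose: HandPose, templates: SignTemplate[], maxError = 0.35): string | null {
  const f = features(pose);
  let best: string | null = null;
  let bestErr = Infinity;
  for (const t of templates) {
    const sumSq = t.features.reduce((s, v, i) => s + (v - f[i]) ** 2, 0);
    const err = Math.sqrt(sumSq / f.length);
    if (err < bestErr) {
      bestErr = err;
      best = t.label;
    }
  }
  return bestErr <= maxError ? best : null;
}
```

Templates can be recorded by calling `features()` on a reference pose for each sign. Returning `null` for weak matches keeps stray hand movement from spawning objects.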

Tech Stack

- Lens Studio 5 (JavaScript + TypeScript)

- Snap Spectacles Gen 4 hardware & world-mesh API

- ObjectTracking3D for ML-powered hand tracking

- Physics for rigid-body dynamics & collisions

- Custom prefab/event system for pooling & runtime instantiation
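
The pooling and runtime-instantiation idea in the last item can be sketched as a small object pool keyed by recognized sign. This is an illustrative sketch in plain TypeScript, not the project's code: `Poolable`, `PrefabPool`, `SpawnRegistry`, and the `maxSize` cap are hypothetical names and values, and in an actual lens the `create` factory would presumably wrap prefab instantiation.

```typescript
// Hypothetical stand-in for a spawned scene object; not a Lens Studio type.
interface Poolable {
  enabled: boolean;
}

// Reuses released objects instead of creating a new one on every spawn.
class PrefabPool<T extends Poolable> {
  private free: T[] = [];
  private create: () => T;
  private maxSize: number;

  constructor(create: () => T, maxSize = 16) {
    this.create = create;
    this.maxSize = maxSize;
  }

  acquire(): T {
    const obj = this.free.pop() ?? this.create();
    obj.enabled = true;
    return obj;
  }

  release(obj: T): void {
    obj.enabled = false;
    // Objects beyond the cap are simply dropped rather than stored.
    if (this.free.length < this.maxSize) {
      this.free.push(obj);
    }
  }
}

// Maps a recognized sign (for example "CAR") to the pool that spawns it.
class SpawnRegistry<T extends Poolable> {
  private pools = new Map<string, PrefabPool<T>>();

  register(sign: string, create: () => T): void {
    this.pools.set(sign.toUpperCase(), new PrefabPool<T>(create));
  }

  // Returns a ready object, or null when no prefab is registered for the sign.
  spawn(sign: string): T | null {
    const pool = this.pools.get(sign.toUpperCase());
    return pool ? pool.acquire() : null;
  }

  despawn(sign: string, obj: T): void {
    this.pools.get(sign.toUpperCase())?.release(obj);
  }
}
```

Keying pools by sign label lets the recognition step stay unaware of how objects are built: it only emits a label, and the registry decides whether to reuse or create.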